Sitemaps tell Google which pages on your website matter most and should be indexed. There are several ways to make a sitemap discoverable, and referencing it in your robots.txt file is one of the simplest ways to make sure Google can find it.

In this guide, we’ll walk you through adding sitemaps to your robots.txt file using Rank Math. We’ll also look at what a sitemap does and how it helps search engines crawl and index your website.

If you work in marketing or web development, your site’s visibility in search results is likely a top priority. To appear in search results, your website and its pages must first be crawled and indexed by search engine bots (robots).

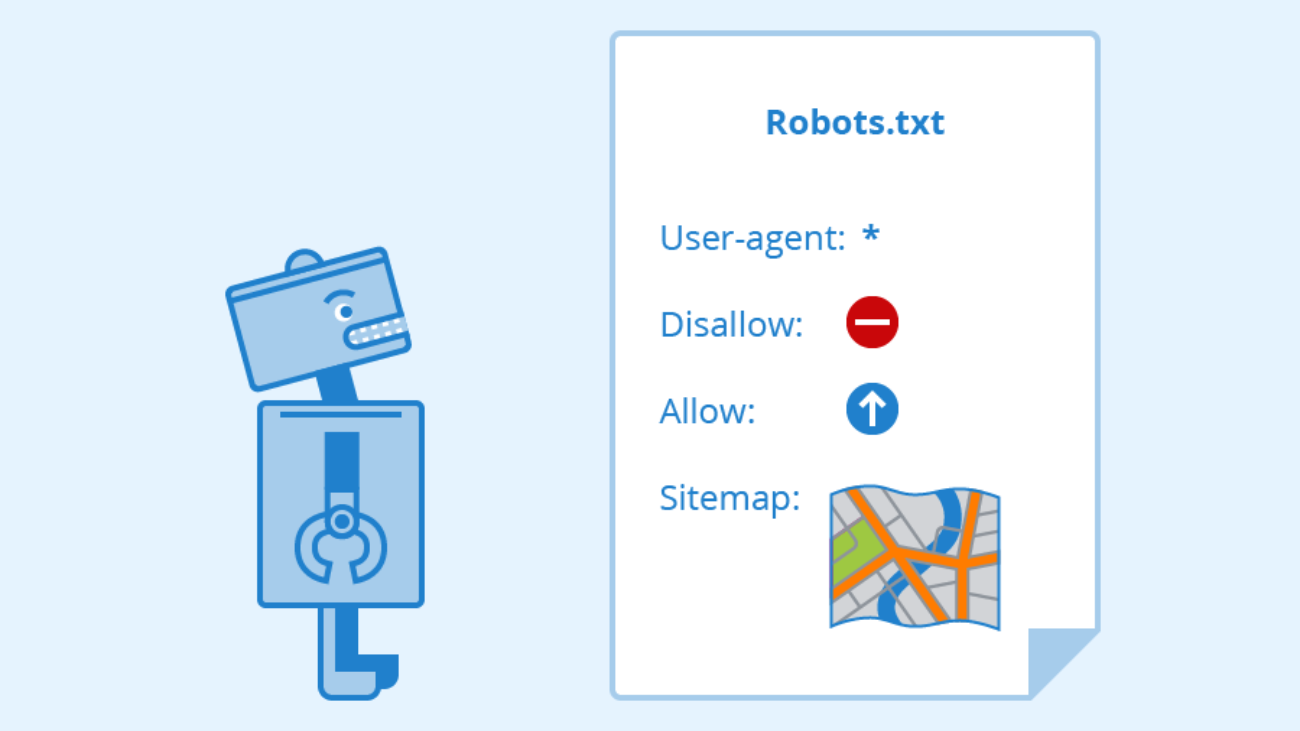

On the technical side of your website, two files help these bots find the information they need: robots.txt and the XML sitemap.

What Is an XML Sitemap?

An XML sitemap is a file on your website that tells search engines how your site’s content is structured. It is written in XML (Extensible Markup Language) and lists the URLs of a site. For each URL, the webmaster can add optional details: when it was last updated, how often it changes, and how important it is relative to other URLs on the site. Search engines can use this information to crawl your site more intelligently.
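To make the structure concrete, here is a minimal Python sketch that builds a small sitemap with the standard library. The URLs, dates, and values are made up for illustration, and the helper name `build_sitemap` is hypothetical; in practice a plugin such as Rank Math generates this file for you.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries):
    """Return sitemap XML (as a string) for a list of URL entries."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        # <loc> is required; the other three tags are optional hints.
        for tag in ("loc", "lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    return ET.tostring(urlset, encoding="unicode")

# Illustrative entries only (hypothetical pages and dates).
sitemap_xml = build_sitemap([
    {"loc": "https://example.com/", "lastmod": "2024-01-15",
     "changefreq": "weekly", "priority": 1.0},
    {"loc": "https://example.com/about/", "lastmod": "2023-11-02"},
])
print(sitemap_xml)
```

Each `<url>` element carries one page address plus whichever optional hints you supply, which is the per-URL detail described above.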


Step 1: Integrating Sitemap URL into Your Robots.txt

By default, Rank Math adds a set of rules, including your sitemap, to your robots.txt file. You can still edit and extend these rules to suit your needs in the text area provided.

In this text area, enter the URL of your sitemap. The URL is different for each website. For instance, if your website is example.com, the sitemap URL would be https://example.com/sitemap.xml. Always use the full URL, including the protocol.

If you have multiple sitemaps, such as a video sitemap, include their URLs as well. Instead of listing each URL individually, consider adding the sitemap index. Search engines can then retrieve all individual sitemaps from that one location, so you don’t need to edit your robots.txt file whenever you add or remove a sitemap.

Example Reference URLs:

Sitemap: https://example.com/sitemap.xml

Sitemap: https://example.com/post-sitemap.xml

Sitemap: https://example.com/page-sitemap.xml

Sitemap: https://example.com/category-sitemap.xml

Sitemap: https://example.com/video-sitemap.xml

Alternatively:

Sitemap: https://example.com/sitemap_index.xml

Step 2: Locating Your Robots.txt File

To check whether your website has a robots.txt file, append /robots.txt to your domain, for example https://befound.pt/robots.txt.

If your website has no robots.txt file, you need to create one and place it in the root directory of your web server. This requires access to your web server; the root is typically the same directory as your site’s main “index.html” file. The exact location depends on the web server software you use. If you are not comfortable working with these files, ask an experienced web developer for help. When you create the file, make sure the filename is entirely lowercase (robots.txt), not Robots.TXT or Robots.Txt.
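As a small illustration of where the file lives, the sketch below derives the robots.txt address from any page URL on a site. The helper name `robots_txt_url` and the blog path are hypothetical; the point is only that the file sits at the root of the host under a lowercase name.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_txt_url(page_url):
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, drop the path, query, and fragment,
    # and point at the lowercase filename in the root directory.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

# Hypothetical page URL, used only to show the result.
print(robots_txt_url("https://befound.pt/blog/some-post/?ref=home"))
# https://befound.pt/robots.txt
```

Opening the printed address in a browser shows whether the file exists.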

Step 3: Embedding Sitemap Location Within the Robots.txt File

Open the robots.txt file at the root of your site. This requires access to your web server, so if you can’t locate or edit the file, ask a web developer or your hosting provider for help.

To let search engines auto-discover your sitemap through robots.txt, add a directive containing the sitemap’s URL to the file:

Sitemap: http://befound.pt/sitemap.xml

Added to a robots.txt file that allows all crawling, the result looks like this:

Sitemap: http://befound.pt/sitemap.xml

User-agent: *

Disallow:
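If you prefer to script this edit, the following Python sketch adds the directive to existing robots.txt content and avoids adding it twice. The function name `add_sitemap_directive` is hypothetical, and the sketch works on a string rather than on a file on your server, so treat it as a starting point rather than a finished tool.

```python
def add_sitemap_directive(robots_txt, sitemap_url):
    """Return robots.txt content with a Sitemap line for sitemap_url, added once."""
    lines = robots_txt.splitlines()
    for line in lines:
        name, _, value = line.partition(":")
        # Field names are case-insensitive, so compare in lowercase.
        if name.strip().lower() == "sitemap" and value.strip() == sitemap_url:
            return robots_txt  # Already present; leave the content untouched.
    return "\n".join([f"Sitemap: {sitemap_url}", *lines]) + "\n"

existing = "User-agent: *\nDisallow:\n"
updated = add_sitemap_directive(existing, "http://befound.pt/sitemap.xml")
print(updated)
```

Running the function a second time on `updated` returns the same content, so it is safe to call repeatedly.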

Note: The Sitemap directive is independent of the user-agent line, so it works wherever you place it in the robots.txt file.
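One way to check this yourself is with Python’s built-in robots.txt parser. The sketch below parses two versions of the file, one with the Sitemap line first and one with it last, and reads the sitemap URLs back. It assumes Python 3.8 or later, where `RobotFileParser.site_maps()` is available; the helper name `sitemaps_in` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

def sitemaps_in(robots_txt):
    """Return the sitemap URLs declared in robots.txt content."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []

sitemap_first = "Sitemap: http://befound.pt/sitemap.xml\nUser-agent: *\nDisallow:\n"
sitemap_last = "User-agent: *\nDisallow:\nSitemap: http://befound.pt/sitemap.xml\n"

print(sitemaps_in(sitemap_first))
print(sitemaps_in(sitemap_last))
```

Both calls report the same sitemap URL, regardless of where the directive appears.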

To see this on a live site, go to any website and append /robots.txt to the domain, for example https://befound.pt/robots.txt.

What If You Have Multiple Sitemaps?

According to the sitemap guidelines from Google and Bing, a single XML sitemap must not contain more than 50,000 URLs or exceed 50 MB when uncompressed. Large websites with more URLs than that need to split them across multiple sitemap files.
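The URL limit can be illustrated with a short Python sketch that splits a long list of URLs into groups of at most 50,000. The page URLs are generated placeholders and the function name `split_into_sitemaps` is hypothetical. The sketch checks only the URL count, not the 50 MB size limit.

```python
MAX_URLS_PER_SITEMAP = 50_000

def split_into_sitemaps(urls, limit=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `limit` URLs."""
    return [urls[i:i + limit] for i in range(0, len(urls), limit)]

# Placeholder URLs standing in for a large site.
urls = [f"https://example.com/page-{n}" for n in range(120_000)]
chunks = split_into_sitemaps(urls)
print([len(chunk) for chunk in chunks])  # [50000, 50000, 20000]
```

Each chunk would then be written to its own sitemap file.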

Each of these sitemap files can then be listed in a sitemap index file. The sitemap index is itself an XML file and is essentially a sitemap of sitemaps.
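A sitemap index has the same overall shape as a sitemap, except that each entry points to a sitemap file rather than a page. The Python sketch below builds one with the standard library; the function name `build_sitemap_index` is hypothetical, and the two sitemap URLs are the example addresses used later in this section.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap_index(sitemap_urls):
    """Return sitemap index XML (as a string) listing the given sitemap files."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for sitemap_url in sitemap_urls:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = sitemap_url
    return ET.tostring(index, encoding="unicode")

index_xml = build_sitemap_index([
    "http://befound.pt/sitemap_pages.xml",
    "http://befound.pt/sitemap_posts.xml",
])
print(index_xml)
```

Saved as sitemap_index.xml at the root of the site, this single file is all that robots.txt needs to reference.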

If you use multiple sitemaps, you can specify the URL of your sitemap index file in your robots.txt file, as shown below:

Sitemap: http://befound.pt/sitemap_index.xml

Alternatively, you can list the URL of each sitemap file individually, as in the example below:

Sitemap: http://befound.pt/sitemap_pages.xml

Sitemap: http://befound.pt/sitemap_posts.xml

You now know how to create a robots.txt file that includes your sitemap locations. Use it to help search engines find and crawl your website!